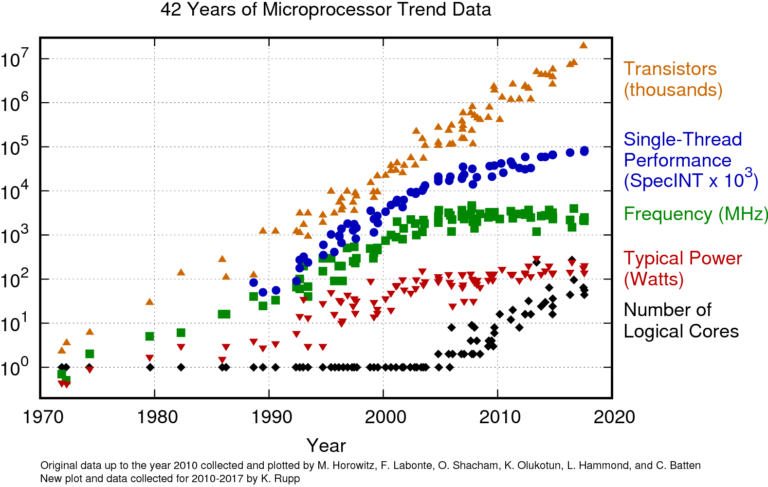

Since the dawn of the age of personal computers, there’s been a remarkably persistent idea: that computers are advancing so quickly that their processing power will roughly double every two years. The idea was first put forward in 1965 by Gordon Moore, who went on to co-found Intel, and later became known as Moore’s Law. (Strictly speaking, Moore’s observation concerned the number of transistors on a chip, which he initially expected to double every year before revising the estimate to every two years in 1975; processing power has broadly followed.) While it’s common for economic theory to tout the idea of continued exponential growth, the idea is far less common when applied to the physical world, so the fact that this “law” has held true for the computer industry over the past 50 years is remarkable. Part of what has made this possible is that processing power has not been bound by clock speed alone. You might have noticed that modern clock speeds are not measured in terahertz! Instead, barring sophisticated liquid cooling, 5GHz seems to be just about the practical limit for a microprocessor.
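To put that growth in perspective, here is a back-of-the-envelope version of the rule. It assumes a clean doubling every two years, which real chips only roughly followed. $N_0$ is the starting figure (transistor count, or loosely, processing power) and $t$ is measured in years:

$$
N(t) = N_0 \cdot 2^{t/2}
\qquad\Rightarrow\qquad
\frac{N(50)}{N_0} = 2^{25} = 33{,}554{,}432 \approx 3.4 \times 10^{7}
$$

Fifty years of doubling every two years therefore compounds to a roughly 34-million-fold increase.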

Undaunted, the computer industry has managed to sidestep this limitation fairly successfully by essentially throwing more processors at the problem, for example with the advent of multi-core processors, which were first produced back in 1996. High-end Intel Xeon processors currently offer as many as 28 cores on a single chip, and AMD has recently announced one with no fewer than 64 cores.

But that’s not to say the cracks aren’t beginning to show. Intel themselves have suggested that Moore’s law is effectively over in its present form. Barring a large-scale shift away from silicon transistors, it’s arguably no longer possible to keep improving so dramatically, and it’s certainly not cost-effective. It’s not clear what silicon’s successor might be, or whether it can deliver anything like the gains we have seen in the past.

It should also be noted that Moore’s law is not the only principle at work. There is also Dennard scaling, which broadly states that as transistors shrink, their power density stays roughly constant, so a chip’s power use is in proportion to the physical area it occupies rather than the number of transistors on it. This was great news in the early days of clock-speed improvement, because it meant that even though chips were getting more powerful, the power they consumed stayed broadly constant. Unfortunately, that doesn’t apply to multi-core processors in the same way, and since around 2006 the relationship seems to have broken down.
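The classic argument behind Dennard scaling can be sketched with the standard relation for dynamic switching power, $P \propto C V^{2} f$, where $C$ is capacitance, $V$ is supply voltage and $f$ is clock frequency. Suppose every linear dimension of a transistor shrinks by a factor $k > 1$. In the idealised picture, capacitance and voltage also shrink by $k$ while frequency rises by $k$. Here $P$ and $A$ are per-transistor power and area, and primes denote values after the shrink:

$$
\begin{aligned}
P &\propto C V^{2} f \\
P' &\propto \frac{C}{k}\left(\frac{V}{k}\right)^{2}(k f) = \frac{C V^{2} f}{k^{2}} \\
A' &= \frac{A}{k^{2}} \\
\frac{P'}{A'} &= \frac{P}{A}
\end{aligned}
$$

A chip of the same area holds $k^{2}$ times as many transistors, each drawing $1/k^{2}$ of the power, so total power stays the same. This idealisation ignores leakage current. Leakage grows as transistors get very small, and it is the main reason the scaling stopped holding.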

So, as demand for processing power has grown in line with the promises of Moore’s law, manifesting most visibly in today’s broad migration to “cloud computing”, we have started to see a corresponding rise in the power needed to run all this silicon. All of this is happening just as we are finally waking up to the fact that our hunger for energy is set to vastly outstrip the power we can safely produce, with environmental degradation an inevitable side effect.

So, what's next?

Will we see the pace of innovation inevitably slow? On the contrary: innovation is the only thing that can overcome this obstacle, but it will require a shift in how we approach it. Perhaps the next step is not vast, centralised data centres, but rather large-scale distributed networks. An obvious way to realise this future is to make use of the vast processing power in our collective pockets: the smartphone. We’ve all come to rely on the capabilities these pocket computers enable, but it’s easy to forget just how powerful they have become.

Some numbers to digest: computing power is measured in FLOPS (floating-point operations per second). The iconic early supercomputer, the Cray-1, was capable of about 160 megaflops. The Samsung Galaxy S8, a fairly typical flagship smartphone from 2017, has an 8-core processor capable of 13.4 gigaflops, roughly 80 times the performance of the Cray. Even today, all of the data centres in the world combined have a computing power measured in petaflops, perhaps approaching 1 exaflop. But there are estimated to be 3.5 billion smartphones in the world, which puts the combined power of all those phones not at a single exaflop but at dozens of exaflops. What does that all mean? If it can be harnessed, there is well over an order of magnitude more power in our collective pockets than in all the data centres in the world.
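The arithmetic behind these comparisons is easy to check. The sketch below uses only the figures quoted in the paragraph. It assumes every phone is as fast as a Galaxy S8 and contributes its full peak rating, so the total should be read as an upper bound rather than usable throughput. On those assumptions the S8 comes out at roughly 84 times the Cray-1, and the world’s phones at roughly 47 exaflops.

```python
# Back-of-the-envelope check of the figures quoted above.
# These are peak ratings, not sustained real-world throughput.

CRAY_1_FLOPS = 160e6         # Cray-1: ~160 megaflops
GALAXY_S8_FLOPS = 13.4e9     # Galaxy S8 8-core CPU: ~13.4 gigaflops
SMARTPHONES = 3.5e9          # estimated number of smartphones in use

EXA = 1e18
ZETTA = 1e21


def speedup(device_flops: float, baseline_flops: float) -> float:
    """How many times faster one device is than another."""
    return device_flops / baseline_flops


def aggregate_flops(devices: float, flops_per_device: float) -> float:
    """Total peak FLOPS if every device contributed its full rating (assumption)."""
    return devices * flops_per_device


if __name__ == "__main__":
    ratio = speedup(GALAXY_S8_FLOPS, CRAY_1_FLOPS)
    total = aggregate_flops(SMARTPHONES, GALAXY_S8_FLOPS)
    print(f"Galaxy S8 vs Cray-1: about {ratio:.0f}x")
    print(f"All smartphones: about {total / EXA:.0f} exaflops")
    print(f"...which is {total / ZETTA:.3f} zettaflops")
```

Swapping in a different per-phone figure, or a fraction of phones actually available, only changes the inputs to `aggregate_flops`.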

So, how can it be utilised?

It’s an interesting question. Raw power is of course not the only consideration; distributing the workload is the primary challenge. A project from the University of Helsinki called Ubispark provides a framework for large-scale computing using the spare cycles of mobile phones that are charging overnight. Its researchers calculated that nine Galaxy S4 smartphones working together have roughly the same processing power as a single typical web server but, crucially, thanks to their optimisation as mobile devices, are 50–90% more power efficient.
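To make the workload-distribution problem more concrete, here is a minimal sketch of one small piece of it: deciding which phones take part and how many tasks each receives. It is a hypothetical illustration, not Ubispark’s actual design or API. The `Device` model, its `relative_speed` field and the `split_work` helper are all assumptions. Only phones that are charging and idle are used, mirroring the overnight idea. Tasks are then split in proportion to speed using the largest-remainder method, so every task is assigned exactly once.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """A phone offering spare cycles (hypothetical model, not Ubispark's API)."""
    device_id: str
    is_charging: bool
    is_idle: bool
    relative_speed: float  # assumed throughput relative to a baseline phone


def eligible(devices: list[Device]) -> list[Device]:
    """Keep only phones that are charging and idle, e.g. plugged in overnight."""
    return [d for d in devices
            if d.is_charging and d.is_idle and d.relative_speed > 0]


def split_work(num_tasks: int, devices: list[Device]) -> dict[str, list[int]]:
    """Give each eligible phone a share of task indices proportional to its speed.

    Uses the largest-remainder method so every task is assigned exactly once.
    """
    pool = eligible(devices)
    if not pool:
        return {}
    total_speed = sum(d.relative_speed for d in pool)
    # Ideal fractional share of the tasks for each device.
    shares = [num_tasks * d.relative_speed / total_speed for d in pool]
    counts = [int(s) for s in shares]
    # Tasks lost to rounding down go to the devices with the largest remainders.
    leftover = num_tasks - sum(counts)
    by_remainder = sorted(range(len(pool)),
                          key=lambda i: shares[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    # Hand out contiguous blocks of task indices.
    plan: dict[str, list[int]] = {}
    start = 0
    for device, count in zip(pool, counts):
        plan[device.device_id] = list(range(start, start + count))
        start += count
    return plan


if __name__ == "__main__":
    phones = [
        Device("phone-a", is_charging=True, is_idle=True, relative_speed=1.0),
        Device("phone-b", is_charging=True, is_idle=True, relative_speed=2.0),
        Device("phone-c", is_charging=False, is_idle=True, relative_speed=1.0),
    ]
    print(split_work(10, phones))
```

In the example, `phone-c` is skipped because it is not charging, and `phone-b` receives roughly twice as many tasks as `phone-a`. A real system would also have to cope with phones unplugging mid-job, verify returned results and move data over the network, none of which this sketch attempts.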

Of course, convincing billions of people to voluntarily devote their phones to solving the world’s supercomputing problems is a challenging prospect, since it brings them no immediate benefit. But the same principle can be applied by any company that has data processing needs and a corresponding audience of customers with its app installed on their smartphones. So why isn’t this practice more widespread? There are many reasons, some technical, some systemic, but we are starting to see changes in the market, which I’ll discuss in Part 2.

The power of the cloud has limits, and the greatest improvements in computing value over the coming decades are likely to come from making use of distributed computing resources. Next time I’ll take a look at how new technologies in the mobile development landscape have the potential to bring big changes to big data.

WRITTEN BY

Elliot Long

Elliot has been developing apps for mobile devices since 2005. Originally from Silicon Valley, he attended university in Birmingham, UK, and has worked with various startups and SMEs in the UK, Spain, and Germany. Since 2018 he’s been overseeing all aspects of mobile software development for Snowdrop Solutions.